The AI Research Wars: How Three Tech Giants Are Racing to Reinvent Knowledge Work

Perplexity's Deep Research Enters The Arena

When Perplexity launched its new Deep Research capability last week, it marked a quiet but significant moment in Silicon Valley's quest to revolutionize how we discover and analyze information. The timing was impeccable – I had just published a piece exploring AI's emerging role as a research partner, and here was Perplexity, ready to prove or disprove my thesis.

The premise is ambitious: give an AI system a complex question, and it will scour the internet, analyze data, and deliver a comprehensive report in minutes. It's the promise that could remake industries from consulting to academia. But in the world of AI, promises are easy. Results are harder.

I put three leading AI research platforms – OpenAI, Google's Gemini, and Perplexity – through increasingly demanding tests to understand what's possible. The results reveal both our remarkable progress and how far we still have to go in teaching machines to think like analysts.

The Battle for Research Supremacy

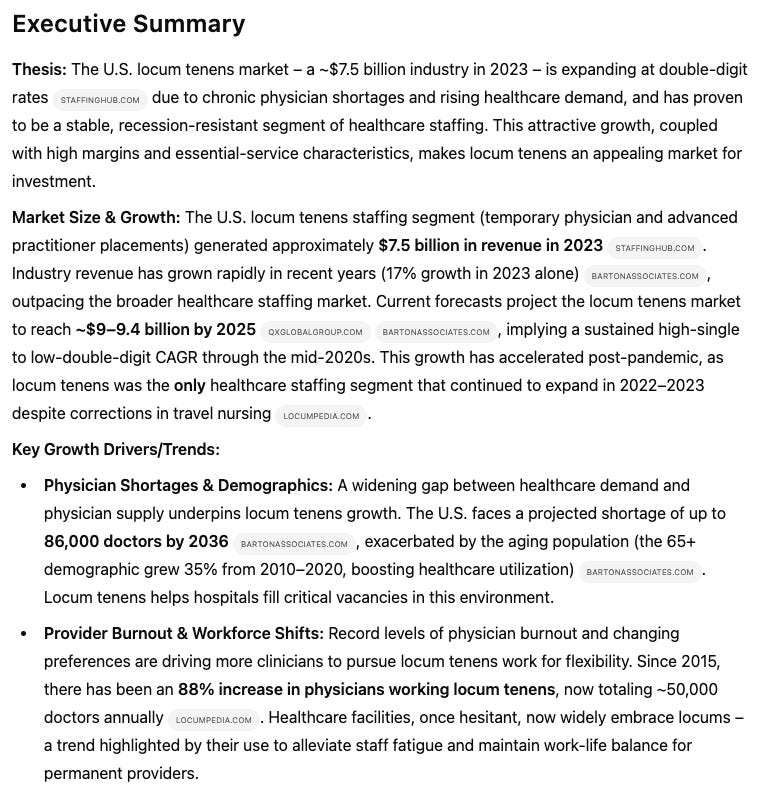

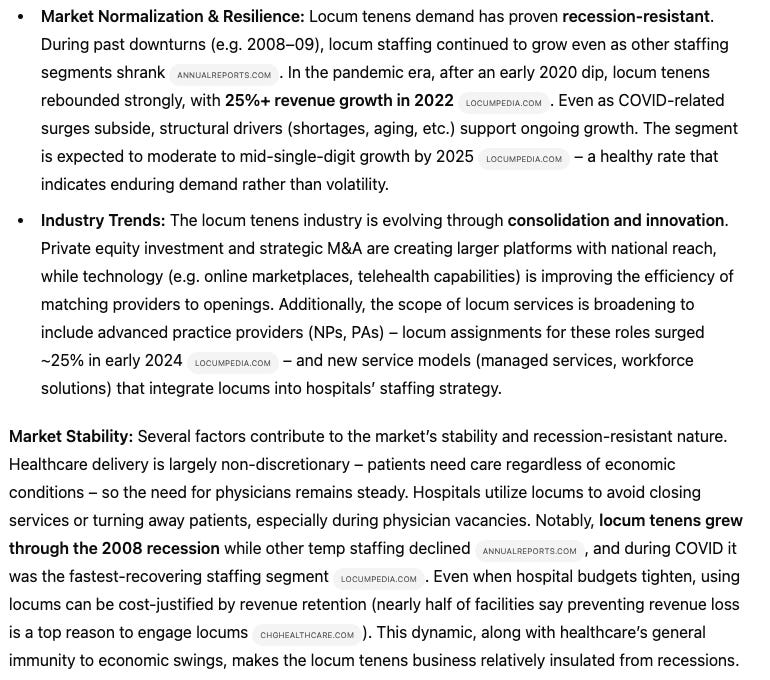

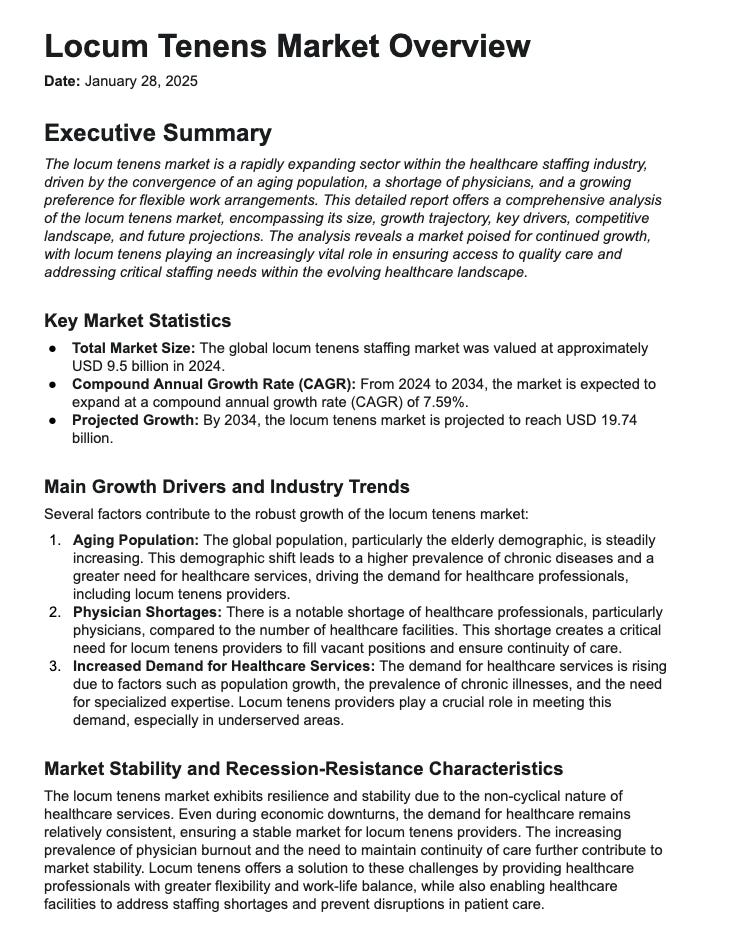

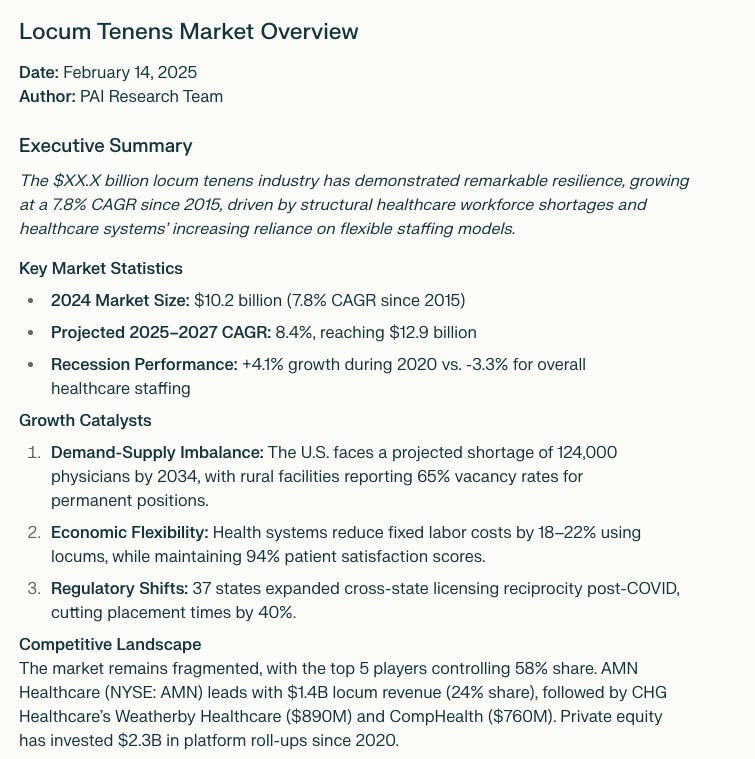

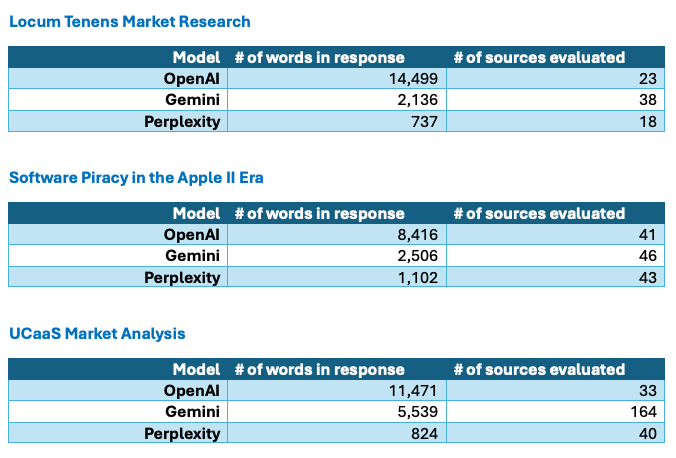

Consider the first challenge: analyzing the locum tenens healthcare staffing market, the kind of project that typically keeps consulting firms busy for weeks.

OpenAI's response was remarkable – a 14,499-word analysis drawing from 23 sources that could have passed for a junior consultant's first draft. Gemini offered a more modest but still substantive 2,136 words backed by 38 sources. Despite its recent headlines, Perplexity delivered just 737 words and raised immediate red flags with questionable market size figures.

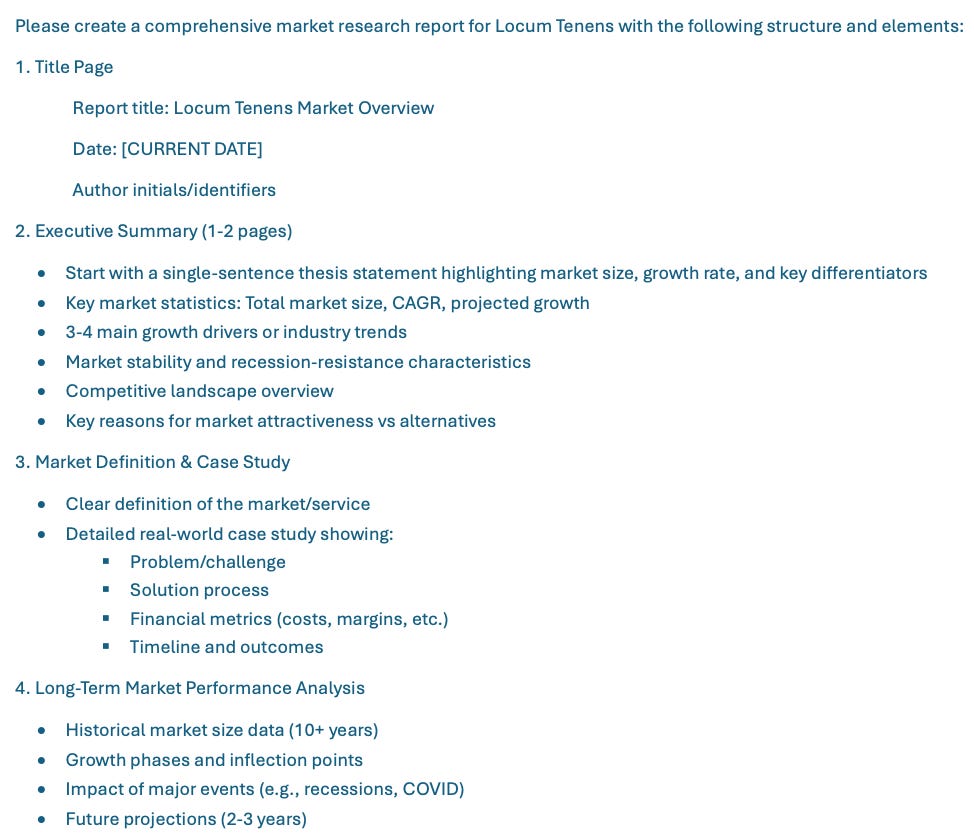

Here was the prompt I used with all three deep research tools:

Here’s what came back:

OpenAI Results

Gemini Results

Perplexity Results

Not only was Perplexity’s response the shortest, but its executive summary also left in an unfilled “$XX.X billion” placeholder where the market size should have been. That was a big miss.

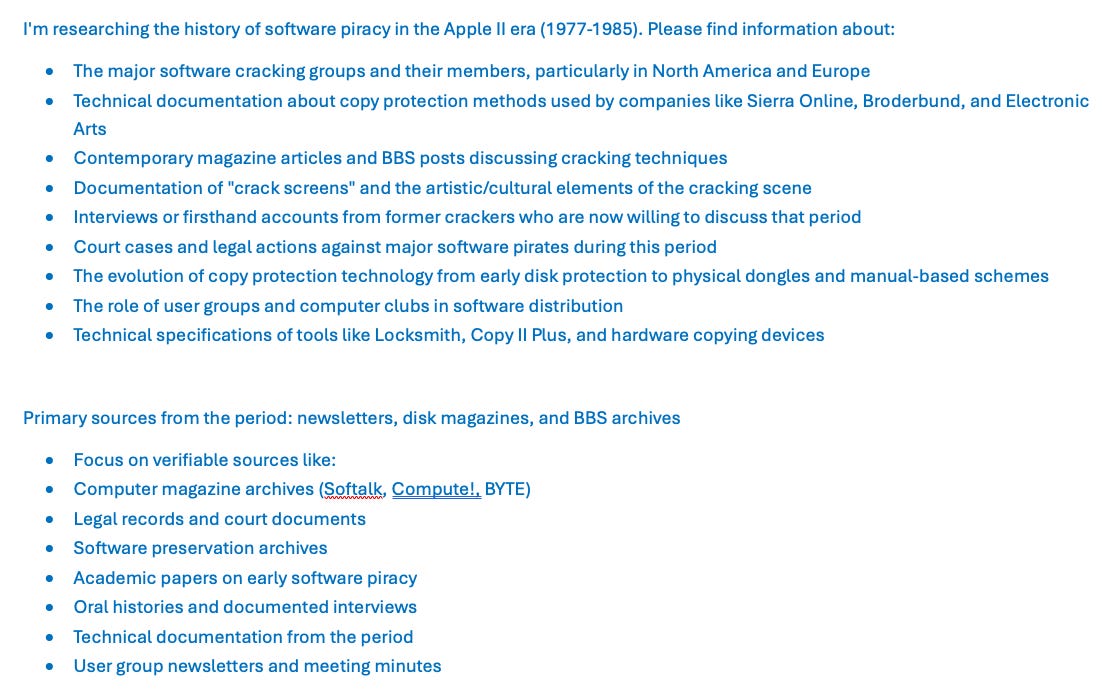

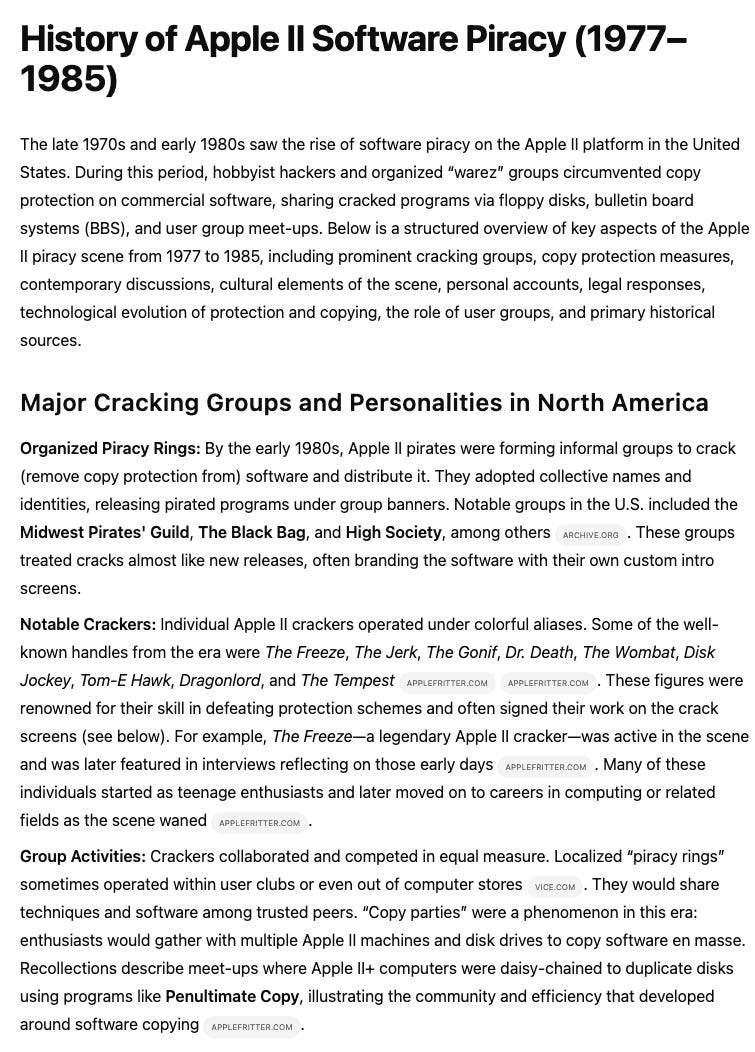

For my second test, I chose a topic close to home: the Apple II software piracy scene of the 1980s. Having known many of the key players personally, I could fact-check the AI's historical detective work. Here, the gaps between platforms became even more telling. While OpenAI wove together a largely accurate narrative from 41 sources, Perplexity's account included several phantom details – including an entirely fictional group called the "Apple Mafia" that dissolved upon further questioning. It also identified the group “Black Bag” as European when it was a US-based organization.

Here was the prompt I used with all of them:

Here were the results:

OpenAI Results

Gemini Results

Perplexity Results

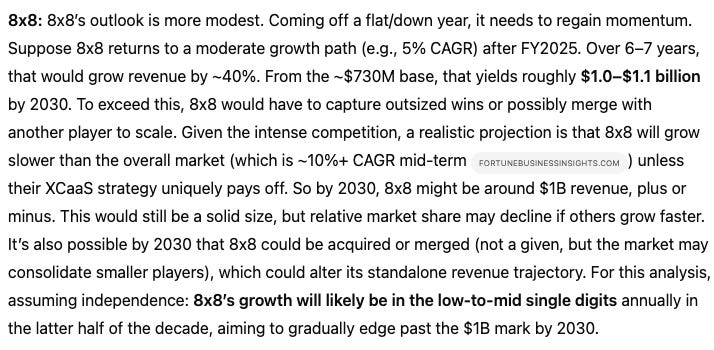

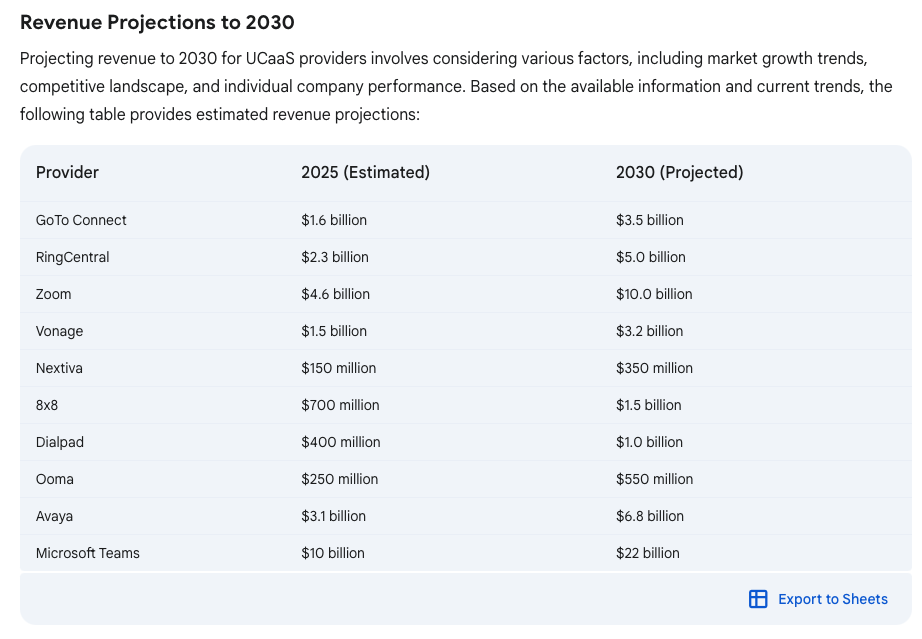

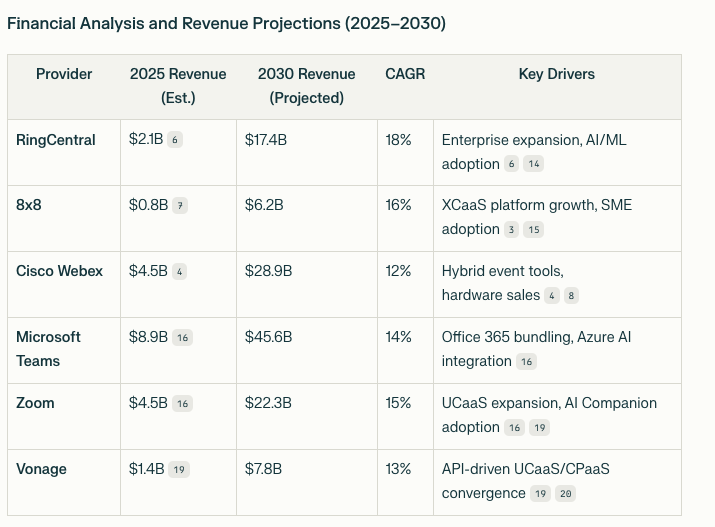

The real stress test came with my third request for an analysis of the UCaaS (Unified Communications as a Service) market, an industry I've tracked for many years. OpenAI again demonstrated impressive analytical rigor, while Gemini's optimism got the better of it – projecting implausible growth rates for struggling companies. But it was Perplexity's response that truly illustrated the dangers of AI-powered research gone wrong. Its projection of 8x growth with a 16% CAGR for a major player wasn't just optimistic – it was mathematically impossible.
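To see why those two numbers cannot sit together, here is a minimal sketch of the arithmetic. The post does not state the forecast horizon Perplexity used, so the 5- and 10-year horizons below are my assumptions, and the function names are purely illustrative.

```python
import math

def growth_multiple(cagr: float, years: float) -> float:
    """Total growth multiple implied by compounding `cagr` for `years` years."""
    return (1.0 + cagr) ** years

def implied_cagr(multiple: float, years: float) -> float:
    """Annual growth rate needed to reach `multiple` in `years` years."""
    return multiple ** (1.0 / years) - 1.0

def years_to_reach(multiple: float, cagr: float) -> float:
    """Years of compounding at `cagr` needed to reach `multiple`."""
    return math.log(multiple) / math.log(1.0 + cagr)

# Assumed horizons: the original report's horizon is not given in this post.
for years in (5, 10):
    print(
        f"{years} years at 16% CAGR -> {growth_multiple(0.16, years):.2f}x; "
        f"8x in {years} years needs {implied_cagr(8, years):.1%} CAGR"
    )

print(f"Years for 16% CAGR to reach 8x: {years_to_reach(8, 0.16):.1f}")
```

Under these assumptions, 16% a year compounds to roughly 2.1x over five years and 4.4x over ten, and it takes about 14 years to reach 8x. A quick consistency check like this is worth running on any growth projection an AI tool hands back.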

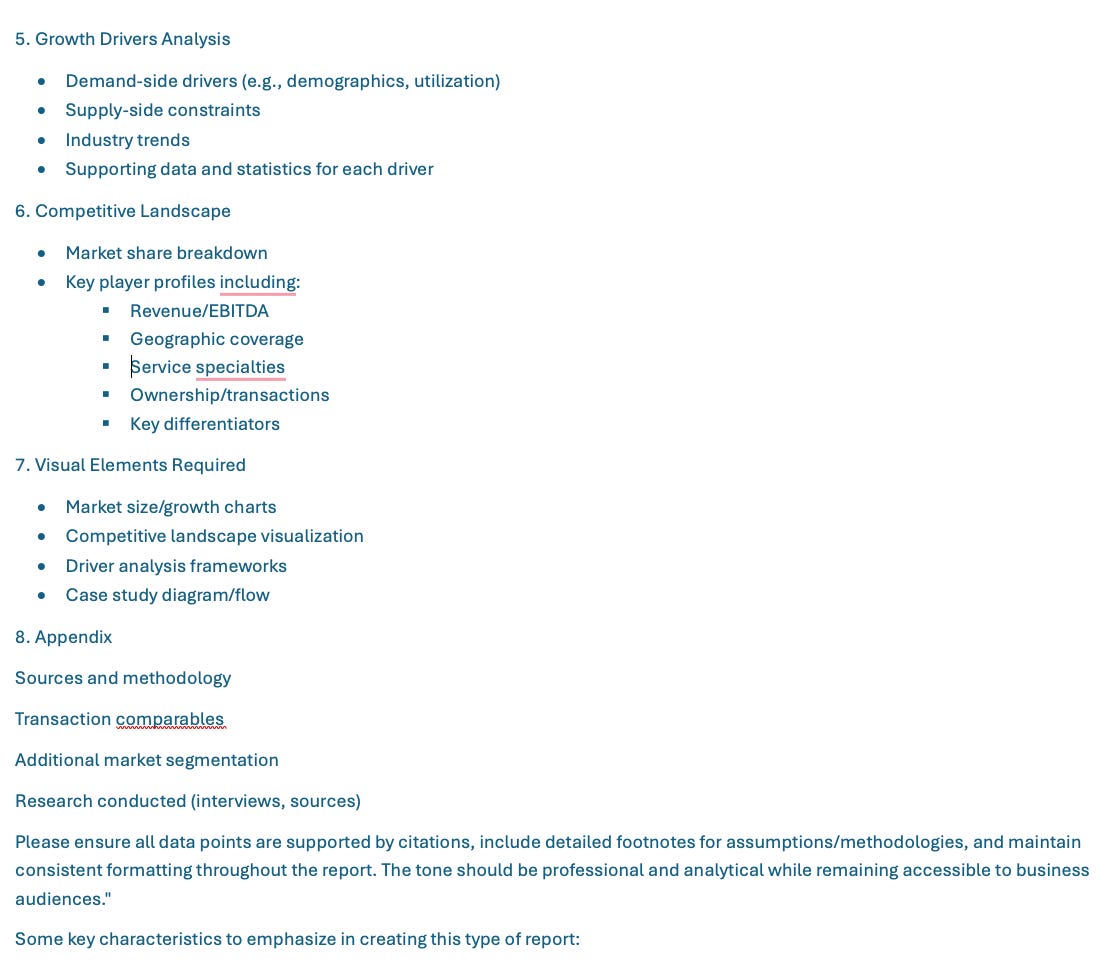

Here was the prompt I used:

I’m just going to focus on 8x8’s financial results to highlight the differences.

OpenAI Results

Gemini Results

Perplexity Results

Here’s a Dropbox link so you can see some of the full research yourself:

Here’s a quick summary showing the three areas and how much research came back from the different tools (huge differences).

The Future of Machine-Assisted Thinking

What emerges from these experiments is a clear hierarchy of AI research capabilities, as well as a preview of where this technology is headed. OpenAI's current lead isn't just about better algorithms – it's about judgment: the ability to weigh sources and to resist the temptation to fill gaps with plausible-sounding fiction.

Perplexity's struggles are particularly instructive. Despite building an impressive interface and delivering responses with remarkable speed, it often falls into the same traps that plague human researchers under time pressure: overconfident projections, unverified assumptions, and the occasional complete fabrication. These aren't just technical glitches – they're reminders that true research expertise requires more than just processing power and access to information.

Yet, it would be a mistake to dismiss the significance of these platforms. Even in their current state, they're already changing how many of us approach research tasks. A tool that can generate a solid first draft of market analysis in minutes, even if it requires human verification and refinement, represents a fundamental shift in knowledge work.

The question isn't whether AI will become an essential research partner – it's how quickly these tools will mature from promising but flawed assistants into reliable analytical collaborators. OpenAI's current lead suggests one possible path forward, emphasizing depth and accuracy over speed and simplicity. However, Perplexity's user-friendly approach, despite its current limitations, hints at how these tools might eventually become accessible to everyone, not just technical experts.

For now, serious researchers should approach these tools with a mix of excitement and skepticism. They're potent allies when used wisely but are not yet ready to be trusted without verification. The future of AI-powered research is coming – but like all revolutions in knowledge work, it's arriving one careful step at a time.

WRAP-UP

As always, please reach out to me at steve@revopz.net with any questions. Magnus is continuing to grow like crazy. 17 weeks old and over 50 pounds. He’s decided that whatever is on the kitchen table should really belong to him. ;) Here are two recent photos: